I love my Fitbit Charge HR [1]. It tracks my steps, it tracks my heartbeat. It tracks my sleep hours. Alas it does not keep me warm at night unless if malfunctions, bursts into flames then rudely singes my beard.

I’m keen to analyse my Fitbit data to gain some insight into my biology and behaviour. What days and times am I the most active? What drives my weight gain and loss? What do I need to do to get a decent night’s sleep? I will attempt to answer such questions through a series of Fitbit posts, each one taking a snapshot of the process from data to insights. This post is focussed on getting the Fitbit data, then wrangling it into tidy data for further visualisation and analysis.

Accessing my Fitbit data is made easy with the R package

fitbitScraper. I have been using my Fitbit since March 2015. I have been recording my weight via the Fitbit Aria Wi-Fi Smart Scale since the end of September 2015. I will analyse data from October 2015 (a complete month since recording my weight) to March 2016 (seven months). These are the data variables of interest:

- Weight

- Sleep

- Steps

- Distance

- Activity

- Calories burned

- Heartbeat.

I will focus on weight, steps and calories burned for this post.

Weight

After authenticating [2], assigning my start and end date, I applied the get_weight_data function and received the following output:

> get_weight_data(cookie, start_date = startDate, end_date = endDate)

time weight

1 2015-09-27 23:59:59 72.8

2 2015-10-04 23:59:59 73.6

3 2015-10-11 23:59:59 74.5

4 2015-10-18 23:59:59 74.5

5 2015-10-25 23:59:59 74.5

6 2015-11-01 23:59:59 74.0

7 2015-11-08 23:59:59 73.3

8 2015-11-15 23:59:59 73.4

9 2015-11-22 23:59:59 74.3

10 2015-11-29 23:59:59 73.1

11 2015-12-06 23:59:59 72.3

12 2015-12-13 23:59:59 72.3

13 2015-12-20 23:59:59 72.5

14 2015-12-27 23:59:59 73.0

15 2016-01-10 23:59:59 72.6

16 2016-01-17 23:59:59 72.8

17 2016-01-24 23:59:59 72.7

18 2016-01-31 23:59:59 72.5

19 2016-02-07 23:59:59 72.8

20 2016-02-14 23:59:59 72.7

21 2016-02-21 23:59:59 73.1

22 2016-02-28 23:59:59 73.5

23 2016-03-06 23:59:59 74.0

24 2016-03-13 23:59:59 73.8

25 2016-03-20 23:59:59 73.7

26 2016-03-27 23:59:59 75.0

27 2016-04-03 23:59:59 74.6

28 2016-04-10 23:59:59 74.6

I have more weights recorded which are not being captured. I tried setting the dates within a single month (March 2016) and it returned the following:

> get_weight_data(cookie, start_date = "2016-03-01", end_date = "2016-03-31")

time weight

1 2016-02-29 20:46:18 74.1

2 2016-03-01 21:04:14 74.4

3 2016-03-02 07:24:03 73.7

4 2016-03-02 21:10:52 73.9

5 2016-03-03 21:55:57 74.2

6 2016-03-08 20:09:37 74.0

7 2016-03-09 22:19:34 74.8

8 2016-03-10 20:06:33 73.4

9 2016-03-12 20:40:28 73.5

10 2016-03-13 21:03:40 73.3

11 2016-03-14 21:02:22 73.5

12 2016-03-15 22:13:07 73.4

13 2016-03-16 18:54:18 73.2

14 2016-03-17 21:02:32 74.4

15 2016-03-18 20:27:08 74.4

16 2016-03-20 18:21:45 73.1

17 2016-03-23 21:54:01 75.3

18 2016-03-24 20:03:48 75.4

19 2016-03-25 14:09:23 74.3

20 2016-03-27 20:18:27 74.9

21 2016-03-29 21:02:56 74.9

22 2016-03-30 22:08:01 74.9

23 2016-03-31 22:22:25 74.2

24 2016-04-02 22:29:36 74.5

Huzzah! All my March weights are visible (as verified by checking against the Fitbit app). I wrote code that loops through each month and binds all weight data. I will make this available on Github soonish. I noted duplicate dates in the data frame. That is, on some days I recorded my weight twice of a given day. As this analysis will focus on daily Fitbit data, I must have unique dates per variable prior to merging all the variables together. I removed the duplicate dates, keeping the weight recorded later on a given day since I tend to weigh myself at night. The final data frame contains two columns: Date (as class Date, not POSIXct) and Weight. Done.

Steps

Getting daily steps is easy with the get_daily_data function.

> dfSteps <- get_daily_data(cookie, what = "steps", startDate, endDate)

> head(dfSteps)

time steps

1 2015-10-01 7496

2 2015-10-02 7450

3 2015-10-03 4005

4 2015-10-04 2085

5 2015-10-05 3101

6 2015-10-06 10413

The date was coerced to class Date, columns renamed and that’s it.

Calories burned

Using the same get_daily_data function, I got the calories burned and intake data.

> dfCaloriesBurned <- get_daily_data(cookie, what = "caloriesBurnedVsIntake", startDate, endDate)

> head(dfCaloriesBurned)

time caloriesBurned caloriesIntake

1 2015-10-01 2428 2185

2 2015-10-02 2488 1790

3 2015-10-03 2353 2361

4 2015-10-04 2041 1899

5 2015-10-05 2213 2217

6 2015-10-06 4642 2474

As with the steps, the date was coerced to class Date and the colnames renamed. The function returns both the calories burned each day and the intake of calories. Calories intake is gathered items entered into the food log. I only recently stopped recording my food. For items that did not have a barcode or were easily identifiable in the database, I would resort to selecting the closest match and guestimating serving sizes. I was underestimating how much I consumed each day as I was often below my target calories intake, yet I gained weight. I stopped recording food and will wait for a sensor to be surgically embedded in my stomach that quantifies the calories I shove down there. I disregarding the calories intake variable.

Merging the data

The data frames for weight, steps and calories burned were merged using Date.

> df <- full_join(dfWeight, dfSteps, by = "Date")

> df <- full_join(df, dfCaloriesBurned, by = "Date")

> head(df)

Date Weight Steps CaloriesBurned

1 2016-03-31 74.2 10069 2622

2 2016-03-30 74.9 7688 2538

3 2016-03-29 74.9 4643 2180

4 2016-03-27 74.9 9097 2510

5 2016-03-25 74.3 11160 2777

6 2016-03-24 75.4 8263 2488

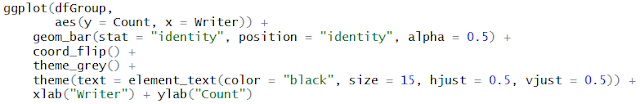

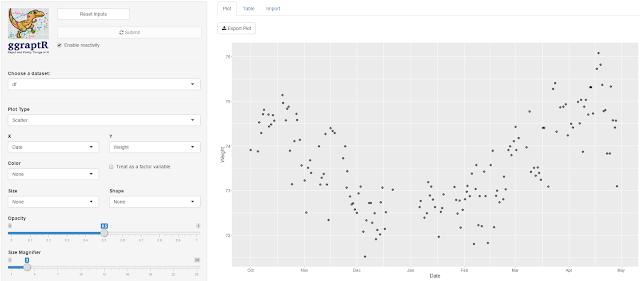

I now have tidy data for three Fitbit variables of daily data. Here’s a quick plot of Steps vs Calories burned.

There’s an obvious relationship since I burn calories with each step. Most of my activities involve making steps – walking, jogging, getting brownies, fleeing swooping birds. I do not clock-up steps when I kayak. I don’t know how Fitbit treats such activities. I’ll check the calorie count before and after next time.

Ultimately I’d like to observe whether any variables can account for outcomes such as weight and sleep. Here’s a Steps vs Weight plot.

There’s no relationship. It remains to be observed whether the inclusion of other Fitbit predictors could account for weight. More on this in future posts.

References and notes

1. I am not affiliated with Fitbit. I’m just a fan. I will however accept gifted Fitbit items if you would like to get in touch with me at willselloutfortech@gmail.com

2. Authenticate with the login function; login(email, password, rememberMe = FALSE). Use your email address and password you use to access your Fitbit dashboard online.